Introduction

This will be the first in a series of blog posts looking at Amazon Web Services (AWS) from the ground up. The aim of this series is to introduce the concepts used in AWS, suggest some approaches to using it, and outline the options available. I won't be covering everything, as I intend this series to be used as a jumping-off point for further research.

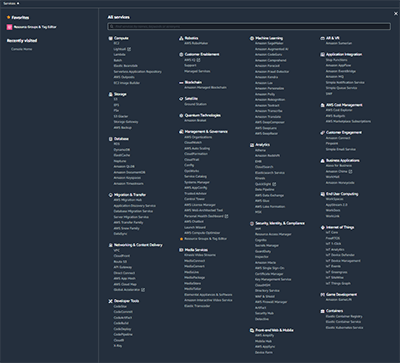

AWS have a huge number of products and offerings available. When you first consider moving to the cloud, knowing which services you need and what the best practices are can be overwhelming, because there is so much choice.

Do you just need to run a standard website? Well then, you could use EC2, ECS, EKS, Elastic Beanstalk, Lightsail, static website hosting on S3, and probably more that I'm forgetting.

To illustrate this point, this is the (recently redesigned) Services menu at the time of writing. I had to zoom out to 50% just to fit it all on screen, and still had to scale the image down.

This list covers only the main product categories; there are still more features underneath each one. EC2, for example, has security groups, Auto Scaling, load balancers and so on. The list goes on.

And that's before you factor in Amazon's annual re:Invent conference, where they launch new products and announce new features, often making a huge number of announcements over the course of the week.

Knowing where to start can be challenging. That's where this series comes in.

Over the next few weeks and months, I plan to grow this series alongside other standalone posts, to offer a view of AWS and how you can make it work for you.

As I mentioned in my first blog post, I work for CirrusHQ, an AWS Partner, so some advice will mention working with a partner where that makes sense. However, I also want this series to be a good starting point in your cloud journey.

What is AWS and why should I use it?

To go back to basics briefly: you may already be aware of AWS and other cloud computing providers, but a bit of background information is always worthwhile.

AWS is Amazon's web services division. They run data centres throughout the world, and as well as using them to host Amazon's own sites (a lot of the early AWS services came out of a desire for products Amazon could use itself), they use those data centres to provide services to other companies and individuals.

Cloud Computing

AWS is an example of a Cloud Computing provider. It is generally at the top of the cloud computing market with the largest market share, with Microsoft Azure and Google Cloud Platform (GCP) in second and third place. As with other tech companies, AWS will be better than the competition in some areas and worse in others.

The main difference between a Cloud Computing provider and a traditional Data Centre provider is the method of purchasing the services. Cloud Computing mainly works on a consumption model, where you only pay for what you use, rather than paying a fixed price for access to a set amount of resources.

You may come across the difference between the models expressed as CAPEX vs OPEX. CAPEX (Capital Expenditure) is where you purchase something over a longer term, or as a one-off expense, that can be treated as a capital investment. For example, you may have an 18-month contract with a traditional Data Centre provider for a specific server that you have exclusive access to. OPEX (Operational Expenditure) is where the costs of running resources are charged at a shorter cadence, such as monthly or even hourly.
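To make the difference a little more concrete, here is a rough sketch comparing a fixed monthly contract with pay-per-use billing over a year of varying usage. All of the prices and usage figures are made-up assumptions for illustration only; they are not real AWS or data centre prices, and the function names are hypothetical.

```python
# All figures below are hypothetical, chosen only to illustrate the two billing models.
FIXED_MONTHLY_FEE = 300.0  # assumed fixed price for a dedicated server (CAPEX-style contract)
HOURLY_RATE = 0.50         # assumed pay-per-use price per server-hour (OPEX-style)


def fixed_cost(months: int, monthly_fee: float = FIXED_MONTHLY_FEE) -> float:
    """Fixed contract: you pay the same amount each month regardless of usage."""
    return months * monthly_fee


def consumption_cost(hours_per_month: list[float], rate: float = HOURLY_RATE) -> float:
    """Consumption model: you pay only for the hours you actually use."""
    return sum(hours * rate for hours in hours_per_month)


# A made-up year: low usage most months, running flat out (~730 hours) in three busy months.
usage = [200, 200, 200, 730, 730, 200, 200, 200, 200, 200, 730, 200]

print(f"Fixed contract:       {fixed_cost(len(usage)):.2f}")
print(f"Pay for what you use: {consumption_cost(usage):.2f}")
```

Under these assumed numbers the consumption model comes out cheaper because usage is bursty. If the server instead ran flat out all year (730 hours every month), the consumption cost would be 12 × 365 = 4,380, more than the 3,600 fixed contract, which is why steady, predictable workloads deserve a closer look.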

Because Cloud Computing is built around paying for what you use, it is more of an operational expense: you can vary your usage each month and don't have to pay for everything up front.

There are still some areas where you can use a CAPEX-style model, such as purchasing Reserved Instances, where you commit to a one- or three-year term in exchange for a lower rate.
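One simple way to reason about a reservation is a break-even calculation: paying up front only makes sense if you would otherwise run the instance on demand for enough hours. The sketch below assumes an all-upfront, one-year reservation and uses made-up prices; real Reserved Instance pricing has more options (such as partial or no upfront payment and three-year terms) and should be checked against the AWS pricing pages. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def break_even_hours(upfront_cost: float, on_demand_rate: float) -> float:
    """Hours of on-demand usage that would cost the same as the upfront payment."""
    return upfront_cost / on_demand_rate


def reservation_pays_off(expected_hours: float, upfront_cost: float, on_demand_rate: float) -> bool:
    """True if running on demand for the expected hours would cost more than reserving."""
    return expected_hours * on_demand_rate > upfront_cost


# Hypothetical prices: 0.10 per hour on demand vs 500 paid up front for a one-year term.
hours = break_even_hours(500.0, 0.10)
print(f"Break-even after {hours:.0f} hours ({hours / HOURS_PER_YEAR:.0%} of the year)")
print("Always-on server:", reservation_pays_off(HOURS_PER_YEAR, 500.0, 0.10))
print("Occasional use:  ", reservation_pays_off(2000, 500.0, 0.10))
```

With these assumed numbers, a machine that runs most of the year benefits from the reservation, while one used only occasionally is cheaper on demand.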

This shift in financial models is something you need to be very aware of in Cloud Computing. It brings some huge advantages in flexibility and cost, including being able to spin up just the resources you need for only as long as you need them. That allows for much better experimentation and tighter cost control. For example, you can turn off machines outside working hours so that they aren't running, and costing you money, when no one is using them.
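As a rough illustration of the working-hours example, the sketch below estimates the monthly saving from only running an instance during the working day. The hourly price, the 10-hour day and the 22 working days per month are all assumptions for illustration; 730 hours is a common approximation for an always-on month, and the function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation for an always-on month: 24 * 365 / 12


def monthly_cost(hourly_rate: float, hours_running: float) -> float:
    """Cost of a single instance billed per hour of running time."""
    return hourly_rate * hours_running


def office_hours_per_month(hours_per_day: float = 10, working_days: int = 22) -> float:
    """Approximate running hours if the instance is only on during the working day."""
    return hours_per_day * working_days


rate = 0.10  # hypothetical hourly price for one instance
always_on = monthly_cost(rate, HOURS_PER_MONTH)
scheduled = monthly_cost(rate, office_hours_per_month())
saving = 1 - scheduled / always_on

print(f"Always on: {always_on:.2f}, office hours only: {scheduled:.2f}, saving {saving:.0%}")
```

Because the saving is a ratio of running hours, it does not depend on the assumed hourly price; only the schedule matters.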

This scalability is a bit of a double-edged sword. You gain great flexibility in scaling and resource capacity, but you can quickly rack up costs if you aren't careful. If you don't keep tight control over how far your resources can scale, they can scale out of control. For example, if you create an Auto Scaling group and set its maximum size to a value you think will never be hit in a million years, there is still a risk that a flood of traffic arrives and the group does its job, scaling all the way up to that maximum.
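One way to keep that maximum honest is to work it out from what you can afford rather than picking a number that "will never be hit". The sketch below, using a hypothetical hourly price and budget, calculates the worst-case monthly cost if a group sat at its maximum size all month, and the largest maximum size that keeps that worst case within budget. The result is the kind of value you might then set as the group's maximum capacity (MaxSize in the Auto Scaling API); the function names here are hypothetical.

```python
import math

HOURS_PER_MONTH = 730  # common approximation for a full month


def worst_case_monthly_cost(max_size: int, hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost if the group scaled to its maximum size and stayed there all month."""
    return max_size * hourly_rate * hours


def max_size_for_budget(monthly_budget: float, hourly_rate: float, hours: float = HOURS_PER_MONTH) -> int:
    """Largest maximum size whose worst-case month still fits within the budget."""
    return math.floor(monthly_budget / (hourly_rate * hours))


rate = 0.10  # hypothetical hourly price per instance
print("Worst case with a 'never going to happen' maximum of 1000:",
      worst_case_monthly_cost(1000, rate))
print("Largest maximum size for a 2000 monthly budget:", max_size_for_budget(2000.0, rate))
```

This is a deliberately pessimistic estimate, since a group rarely sits at its maximum for a whole month, but it gives you a ceiling you have actually chosen.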

There are thankfully a number of ways you can control and monitor these activities, but it can be quite an adjustment if you are coming from a traditional data centre environment. There, you may have bought one server, and even if you max it out 24x7, it's all covered by the initial payment; going up or down in usage doesn't affect you financially. In the cloud, scaling is built into almost every service so that your capacity can grow with your needs. This can lead to huge cost savings (up to 70% in some areas), but you can also scale past the amount you would normally have paid. You may get far more resources for the same money, but costs can quickly increase if you are not careful.

AWS specifically

All of the above is as true for other Cloud Computing providers as it is for AWS. AWS has at its core the philosophy of paying only for what you use and being able to scale to what you need.

One of AWS's core strengths is how their products can be used together to solve problems. Their teams work from a builder's perspective, as they use these services themselves first and want to enable end users to build out applications and solutions. Most, if not all, of AWS's products started life as internal projects in some form or another. S3 and DynamoDB, for example, both have their roots in systems Amazon used to drive its own website.

Where Next?

As I mentioned, this is the first post in a series aimed at helping you get started on AWS. In terms of technical skill level, I am aiming it at people with at least some experience of setting up traditional servers or deploying code. I'll be explaining things like what EC2 instances are and how security groups work, but won't be going into detail on whether to use Apache or Nginx.

With that said, my next entry in this series will likely cover EC2 and some basic setup, including VPCs, Security Groups and related configuration. Beyond that, though, I'm looking for some input.

I've listed some potential categories below. I know this is a new blog, so I might not get any feedback this early, but anything is welcome. (I'm using Google Forms as it seems the most straightforward and least limited poll option, but feel free to suggest another method if you have a better one.)

Update: I've replaced the Google Form with a link for now, just until I can get the embed to behave or find or create a better poll option. Here are the options on the form:

- Serverless Architecture
- Docker / Elastic Container Service (ECS) / Elastic Container Registry (ECR)
- Elastic Beanstalk
- CloudWatch
- S3 / Storage
- Lambda
- AWS Batch