Working in the cloud can be complex and challenging, with many ways to utilise the services that a provider like AWS offers. You can use cloud resources in much the same way as a traditional on-premises environment, but doing so often comes with drawbacks and unexpected pitfalls that are easy to fall victim to.

Even if you avoid the most common pitfalls, utilising all that cloud computing has to offer is not straightforward and may require some changes, even for workloads that have been in the cloud for a while. These can range from simple steps, like ensuring you only use your Root account for tasks that strictly require it, up to much larger efforts such as re-architecting your workload to take advantage of serverless computing, for example with API Gateway and Lambda.

To help navigate these potential issues, AWS came up with the Well-Architected Framework.

Well-Architected Framework

The Well-Architected Framework comprises several elements:

- The Well-Architected white paper

- Hands-on Labs

- The Well-Architected Tool

- Well-Architected Lenses (domain-specific white papers, such as the Machine Learning Lens)

- A set of General Design Principles

AWS also run a Well-Architected Partner Program, which allows AWS Partners to provide expertise and help customers use the Well-Architected resources to enhance their workloads. I'll explain more on this near the end. I work for an AWS Partner and I am a Well-Architected Lead. However, this post aims to provide an understanding of what the Well-Architected Framework is and where it could be useful.

Background

The Well-Architected Framework was initially developed within AWS as an internal tool. The reasoning behind creating the framework was to standardise approaches to developing new applications, and to give their Solution Architects a standard set of recommendations along common lines. The framework has evolved a few times: in October 2015 they released the white papers, then in December 2018 the Well-Architected Tool was released.

What is the Well-Architected Framework?

The core of the Well-Architected Framework is the advice presented in the white papers, which covers a wide range of topics (more on these below in the 5 Pillars section). These white papers are then further enhanced by the Lenses, which offer domain-specific advice for areas such as Machine Learning, Internet of Things, Serverless and others.

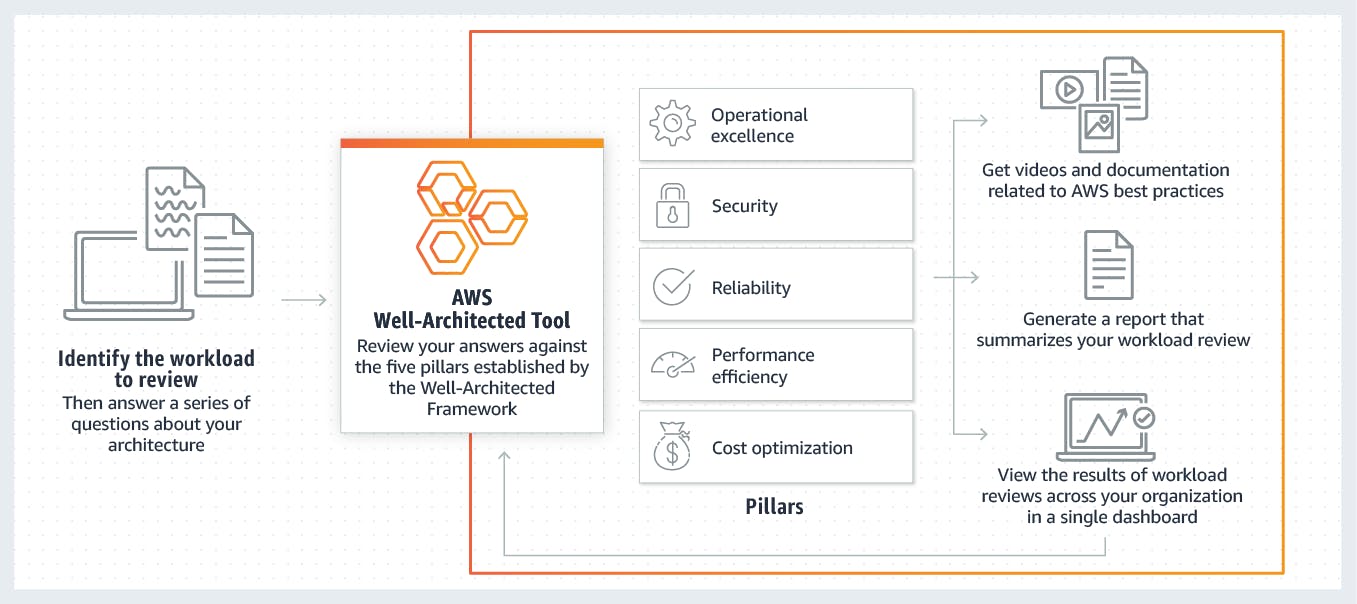

The Well-Architected Tool is a free service in the AWS Console that takes you through each of the key areas and asks questions to prompt you to think about specific parts of your workload. The answers help you identify gaps in your processes and where improvements could be made in each focus area.

There are also hands-on labs available to cover areas that will help you improve your workloads.

What the Well-Architected Framework won't do

The Well-Architected Framework is designed to help you review any number of workloads by looking at how you design, deploy and support them. Its aim is to provide general principles for designing systems and architectures that are scalable, highly available and efficient, while also being supportable and cost controlled.

However, the framework is not prescriptive, and it is not intended as an auditing tool. There won't be specific recommendations on which services to use or which deployment system is the best, as these things are usually very situation-dependent. One approach may be better for some workloads, while the opposite is true for others.

AWS also don't provide any guarantees on a workload being 'Well-Architected'. The tool is there to provide areas to focus on and discuss, and the advice changes over time.

The framework provides you with approaches to make informed decisions and gives you suggestions on ways to find out which approach is best for you.

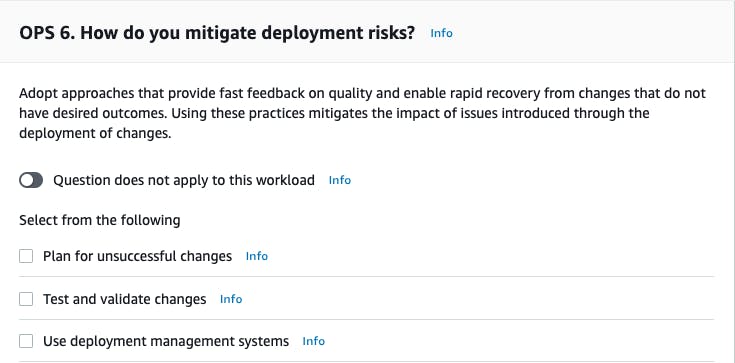

As an example, here is an extract from a question targeting deployment risks. One of the suggested areas to focus on is to use a deployment management system, but there is no mention of which one to use. AWS do have deployment management systems, such as CodeDeploy, but they also recognise that other CI/CD options are available and may be better than their own offering for specific use cases.

Design Principles

AWS have provided several General Design Principles that guide the rest of the advice from the framework.

Their definitions are here: https://wa.aws.amazon.com/wat.design_principles.wa-dp.en.html

I have also detailed them below, with my own explanations.

- Stop guessing your capacity needs

Designing systems to cope with the capacity that you might need this week, never mind in 6 months' or a year's time, is an almost impossible task. If you over-provision, you will end up with expensive resources you aren't using, and if you under-provision, your user experience will suffer. Cloud computing allows you to use as much or as little as you need and respond to changes in demand, provided your systems are designed with scalability in mind.
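To make the idea of responding to demand concrete, here is a minimal sketch of proportional scaling: given the current instance count and average CPU, it works out how many instances would bring CPU back to a target. The function name, the 60% target and the instance limits are all assumptions for illustration. This is a simplification, not how any particular AWS scaling policy is implemented.

```python
import math


def desired_capacity(current_instances: int, current_cpu: float, target_cpu: float,
                     min_instances: int = 1, max_instances: int = 20) -> int:
    """Estimate the instance count needed to bring average CPU back to the target.

    Hypothetical helper: assumes load spreads evenly across instances.
    """
    if current_instances < 1 or target_cpu <= 0:
        raise ValueError("need at least one instance and a positive target")
    # Scale the fleet in proportion to how far the metric is from the target.
    raw = math.ceil(current_instances * current_cpu / target_cpu)
    # Clamp to the configured limits so a spike cannot scale without bound.
    return max(min_instances, min(max_instances, raw))


print(desired_capacity(4, 90.0, 60.0))  # busy period: scale out to 6
print(desired_capacity(4, 15.0, 60.0))  # quiet period: scale in to 1
```

The upper limit matters as much as the formula: it keeps a runaway metric from turning into a runaway bill.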

- Test systems at production scale

The flexibility of cloud computing allows your test platforms to be at the same scale as your production platform, and to only be available when you need them. Test systems that sit unused overnight are just wasting money. Scaling to the same size as production also allows you to catch issues that may not appear in a smaller test environment (for example, issues that only occur when using multiple servers might not show up if you only have 1 test server).
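As a rough illustration of the cost argument, the sketch below compares a production-scale test environment left running all month with one that only runs during working hours. The hourly rate, instance count and hours are made-up numbers, not real AWS prices, and amounts are kept in whole cents to avoid floating point rounding.

```python
def monthly_cost_cents(rate_cents_per_hour: int, instances: int,
                       hours_per_day: int, days_per_month: int) -> int:
    """Total monthly cost in cents for a fleet billed per instance-hour."""
    return rate_cents_per_hour * instances * hours_per_day * days_per_month


RATE_CENTS = 10            # assumed price: $0.10 per instance-hour
PROD_SCALE_INSTANCES = 10  # assumed size of the production fleet

# Left running 24 hours a day for a 30-day month.
always_on = monthly_cost_cents(RATE_CENTS, PROD_SCALE_INSTANCES, 24, 30)
# Started for 8 hours a day on 22 working days only.
working_hours = monthly_cost_cents(RATE_CENTS, PROD_SCALE_INSTANCES, 8, 22)

print(f"Always on:     ${always_on / 100:.2f}")
print(f"Working hours: ${working_hours / 100:.2f}")
print(f"Difference:    ${(always_on - working_hours) / 100:.2f}")
```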

- Automate to make architectural experimentation easier

Automation allows you to try out potential changes in much the same way as scaling your test systems, so that you can find out whether a new change or a tweak to a setting will work for you without having to provision resources for long periods.

- Allow for evolutionary architectures

Similarly to the above, as your systems grow over time, you will want to make changes and add new architecture to respond to changes in usage and business decisions. Cloud computing makes this a lot easier by allowing for scaling changes and automation.

- Drive architectures using data

Monitoring your architecture allows you to make informed decisions about what is working and what isn't. Finding out where you have more latency in a web solution, for example, might drive a decision to use a different type of load balancing.
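As a small sketch of what letting data drive a decision can look like, the snippet below compares latency samples from two hypothetical backends using a nearest-rank percentile. The backend names and the sample values are invented for illustration. In practice these figures would come from your monitoring system.

```python
import math


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times in milliseconds for two backends.
latencies_ms = {
    "backend-a": [20, 22, 25, 21, 23, 24, 22, 20, 26, 21],
    "backend-b": [20, 22, 25, 21, 250, 24, 22, 20, 300, 21],
}

for name, samples in latencies_ms.items():
    print(name, "p50:", percentile(samples, 50), "p95:", percentile(samples, 95))
```

Both backends have the same median here, but the 95th percentile shows that one of them occasionally responds far more slowly, which an average or median alone would hide.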

- Improve through game days

Game Days are a useful simulation exercise to replicate your architecture and simulate what would happen when things go wrong. This has multiple benefits, such as getting your organisation ready to deal with real events and finding out where your workloads might break. It's much better for something to fail during a Game Day than during a normal working day where the impact is real.

The 5 Pillars

The Well-Architected Framework is broken into 5 pillars, each providing advice on a specific area. The Operational Excellence pillar is the first of these, as AWS view it as underpinning the rest. It's tempting to focus only on the Security or Cost Optimisation pillars, but working through all of them as an initial baseline is recommended, as a number of the recommendations link to and support each other.

I will give some information on each pillar here and will do deeper dives into each pillar in the future, but it's also worth reading the white paper on the pillars.

https://d1.awsstatic.com/whitepapers/architecture/AWS_Well-Architected_Framework.pdf

Operational Excellence

Operational Excellence is focused on how you support a workload. This pillar looks at your overall processes as a business, your organisational culture and how your teams are set up, in order to give advice on where improvements could be made.

The Operational Excellence pillar covers priorities, both internal and external, and how you decide between them, as well as areas such as supporting your workload, automation in response to events, and daily operations.

Security

The Security pillar is concerned with all things security related that apply to your workload. This includes the usual security-focused areas such as encryption at rest and encryption in transit, but it also covers areas such as human and computer access, assessing the latest threats, and data classification.

Reliability

The reliability pillar is aimed at ensuring your workload can cope with what is thrown at it. This pillar will cover areas such as scaling to respond to demand, fixing things when they do go wrong, backups, planning for changes and more.

Performance Efficiency

The Performance Efficiency pillar is focused on ensuring that you are getting the most out of your workload. This covers areas such as right-sizing (where you ensure the resources you are using are just the right fit for what you need) as well as monitoring performance and tracking data to drive architectural decisions.

Cost Optimisation

The Cost Optimisation pillar is focused on cost as a whole. However, this isn't just about reducing costs. This pillar provides ways to reduce cost (such as scaling down resources when user traffic is low and you don't need them), guidance on making architectural decisions with an eye to cost, and help with understanding just how your monthly spend breaks down.

The Well-Architected Tool

The Well-Architected Tool itself goes through each of the 5 pillars and asks questions about how you support your workload and your processes. Once the review is completed, a number of areas will be highlighted, split into High Risk and Medium Risk.

Almost every workload will end up with some High Risk Items (HRIs). Even AWS's own internal workloads still have some, as sometimes the right decision is to be aware of a risk and plan for it rather than eliminate it. Risks also span the pillars, and some pull in almost opposite directions. For example, you could mitigate scaling risks by having lots of servers, but then cost would become a risk item. It's all about knowing the risks and making decisions about which ones you want to concentrate on.

Hands-on Labs

AWS have a number of free Hands-on Labs that show examples of how you can address some of the risks that arise, by designing scalable solutions and following other recommendations that fit with the principles of the framework.

The full labs are available here: https://www.wellarchitectedlabs.com/. They are organised into levels of complexity / knowledge required.

Well-Architected Partner Program

As I mentioned at the start of this post, AWS also run the Well-Architected Partner Program, where the same tools and frameworks are used by an AWS Partner to perform a review of your workload, usually through a workshop involving each of the key stakeholders in your teams. This is usually designed to be as wide as possible, covering your developers, operations, and financial teams as well as C-level executives. Anyone who has an involvement in the direction of the workload is good to bring to the table, as discussing the workload is often a good way to surface knowledge that may not be shared across the teams and to support informed decisions.

AWS also provide credits to have these reviews performed, with the stipulation that any remediation work to enhance the workload must resolve at least 25% of the High Risk Items and must be carried out by a partner.
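The 25% figure is easy to sanity-check with a little arithmetic. The sketch below is only an illustration of that calculation, using hypothetical function names and HRI counts. The actual programme rules are set by AWS and may differ or change.

```python
import math


def min_hris_to_resolve(hris_before: int, threshold_pct: int = 25) -> int:
    """Smallest number of HRIs that must be resolved to reach the threshold."""
    if hris_before <= 0:
        raise ValueError("review must have at least one HRI")
    return math.ceil(hris_before * threshold_pct / 100)


def reduction_met(hris_before: int, hris_after: int, threshold_pct: int = 25) -> bool:
    """True if enough HRIs were resolved between the two reviews."""
    resolved = hris_before - hris_after
    return resolved >= min_hris_to_resolve(hris_before, threshold_pct)


# Example: a review that found 12 HRIs needs at least 3 of them resolved.
print(min_hris_to_resolve(12))
print(reduction_met(12, 9))   # 3 resolved
print(reduction_met(12, 10))  # only 2 resolved
```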

If you are interested in hearing more about the partner I work for and what we can do, feel free to read through our Well-Architected offering and contact us for a discussion:

https://www.cirrushq.com/solutions/well-architected/